Stable Diffusion and ControlNet: A Beginner's Guide

Table of contents

- Introduction

- Prerequisites

- What is Stable Diffusion?

- What is ControlNet?

- Why Is ControlNet So Popular and How Does It Work?

- What does it do?

- Different Models in ControlNet Stable Diffusion

- Canny Edge Model

- Scribbles

- Depth Map

- Segmentation Map

- Human pose detection

- Straight line detector

- HED edge detector

- Use Cases of ControlNet with Stable Diffusion

- ControlNet Demo using Stable Diffusion

- Conclusion

Introduction

Generative AI refers to a category of artificial intelligence (AI) systems capable of generating new content, such as text, images, audio, or video, similar to content produced by humans. These models are trained on large datasets and learn patterns from them in order to generate new content. One open-source text-to-image model that has gained a lot of traction is Stable Diffusion.

Stable Diffusion already has many features and capabilities, but ControlNet, an extension to Stable Diffusion, makes it far more powerful by letting you create multiple variations of the same image.

In this article, we will focus mainly on two topics: ControlNet and Stable Diffusion.

Prerequisites

To run ControlNet, you first need a working Stable Diffusion environment. If you are not familiar with setting one up on a VM, refer to our step-by-step guides for AWS, GCP, and Azure below.

For AWS,

For GCP,

For Azure.

What is Stable Diffusion?

- Stable Diffusion is a deep learning, text-to-image model released in 2022. It is primarily used to generate detailed images conditioned on text descriptions, though it can also be applied to other tasks such as inpainting, outpainting, and image-to-image translation guided by a text prompt. It was developed by the start-up Stability AI in collaboration with several academic researchers and non-profit organizations.

What is ControlNet?

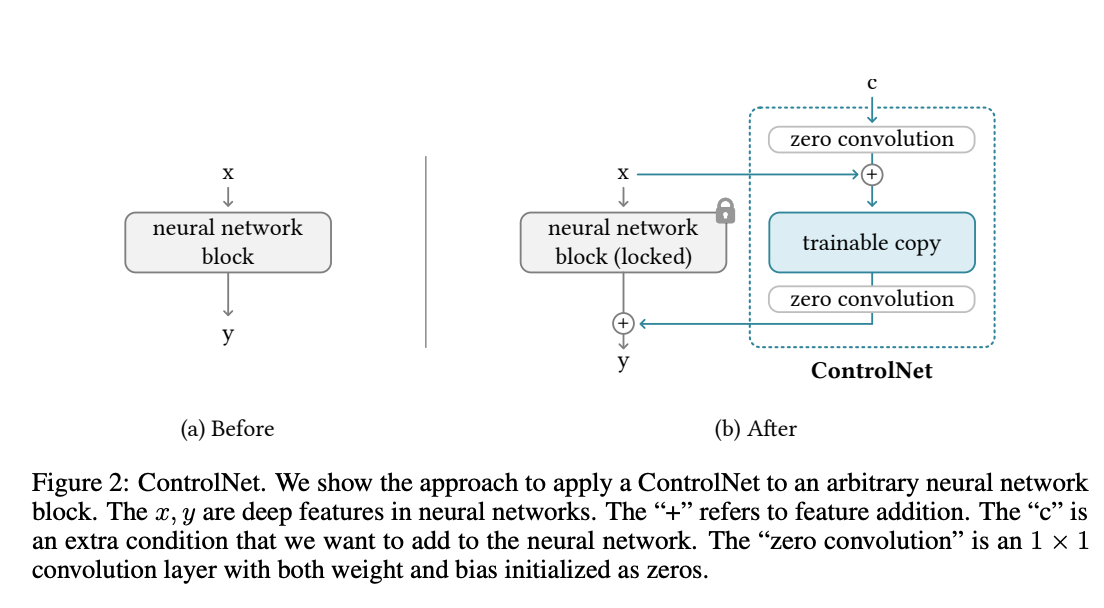

ControlNet is a neural network architecture that lets you control a diffusion model, such as Stable Diffusion, by adding extra conditions.

ControlNet takes an existing diffusion model and extends its architecture so that it accepts an additional conditioning input, as the picture below shows.

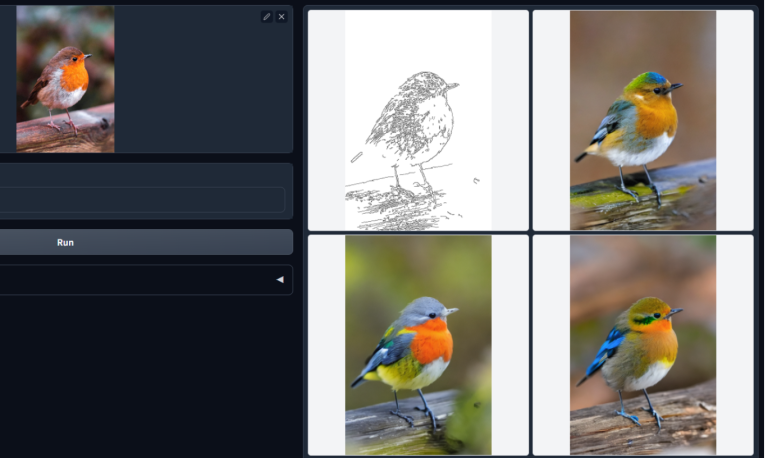

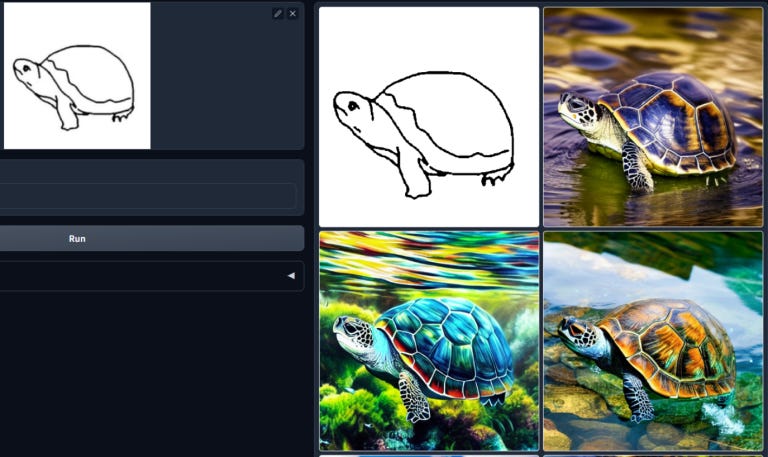

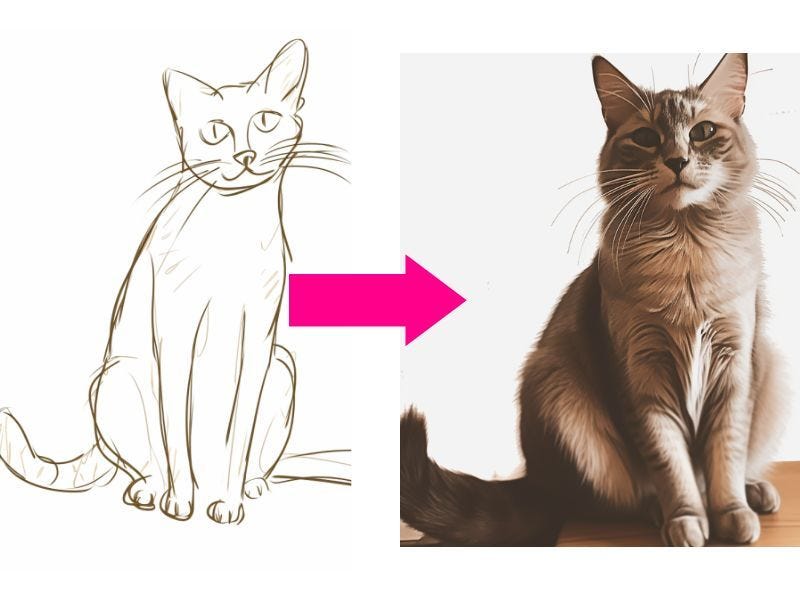

ControlNet can take a simple image or a scribble and use it as the basis for generating multiple new images.

Why Is ControlNet So Popular and How Does It Work?

ControlNet makes a copy of the neural network. It keeps one copy unchanged and trains the other, then combines the two to produce the final output.

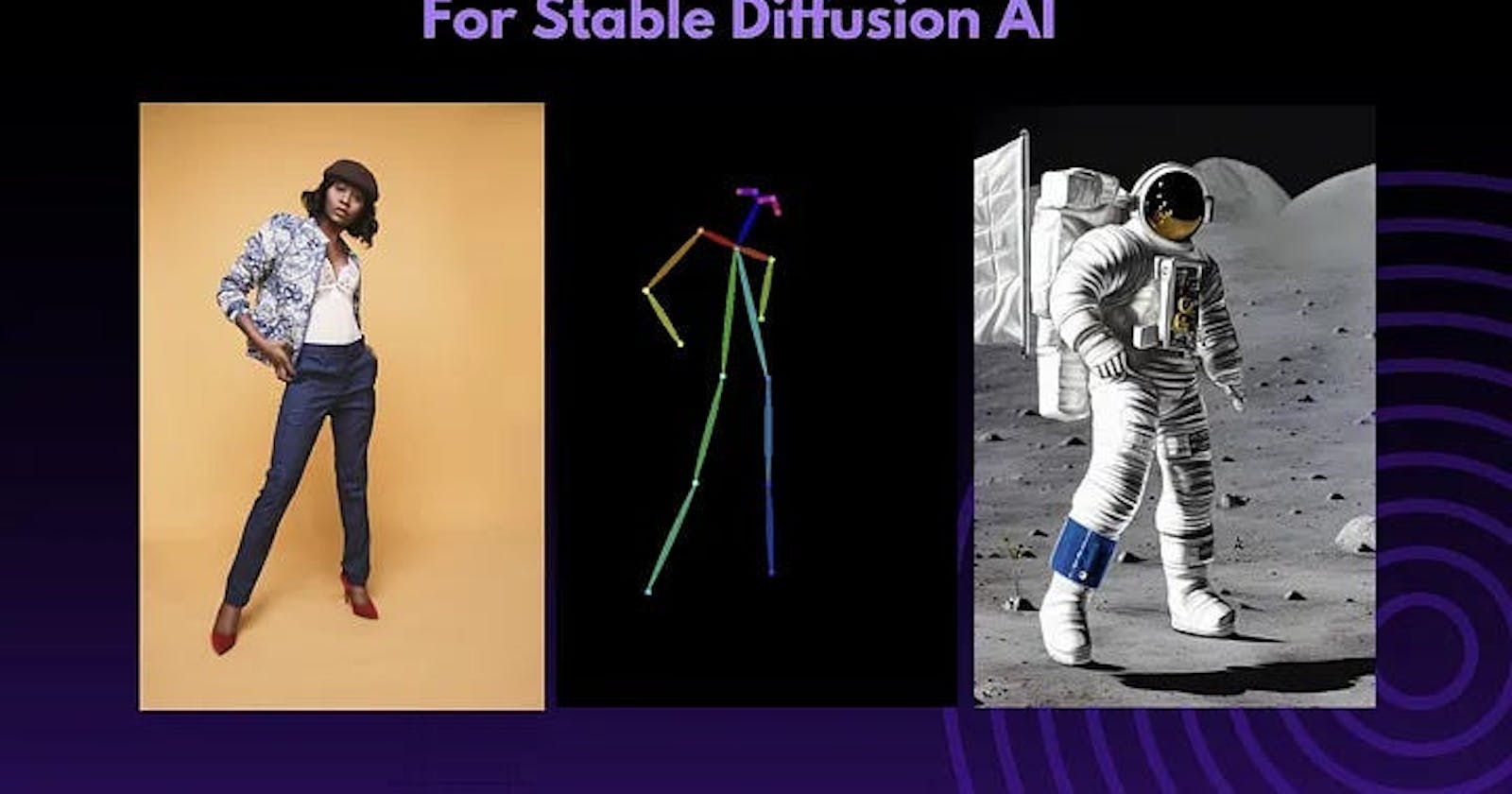

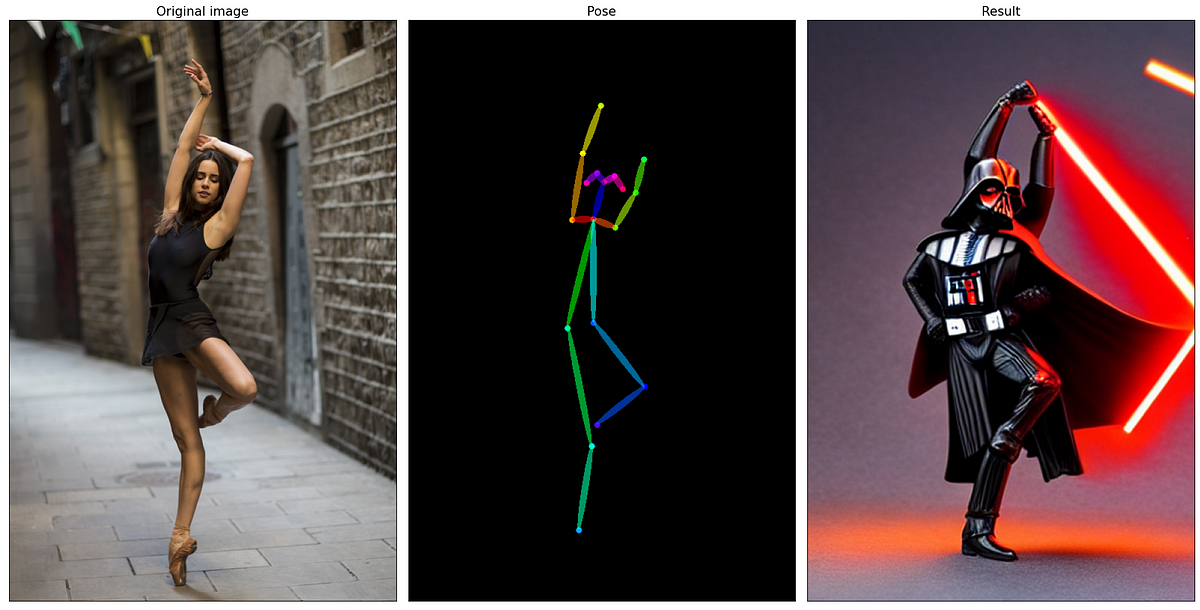

One of its remarkable properties shows up when you upload a picture of a man standing in a pose: ControlNet can generate new images that keep the pose but replace the man with a child, a woman, or anything else.

It copies the weights of neural network blocks into a “locked” copy and a “trainable” copy.

The “trainable” one learns your condition. The “locked” one preserves your model.

Thanks to this, training with a small dataset of image pairs will not damage the production-ready diffusion model.
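The locked/trainable idea can be illustrated with a toy sketch. This is not ControlNet's actual code: `TinyBlock`, `ControlledBlock`, and the scalar `gate` are hypothetical names made up for illustration, and a single scalar weight stands in for a whole network block. The gate starts at zero, a simplification of the zero-initialised connection layers described by ControlNet's authors, so the combined block initially behaves exactly like the pretrained one.

```python
import copy


class TinyBlock:
    """Stand-in for one network block: y = w * x + b (scalars for clarity)."""

    def __init__(self, w, b):
        self.w = w
        self.b = b

    def __call__(self, x):
        return self.w * x + self.b


class ControlledBlock:
    """Hypothetical wrapper: a frozen block plus a trainable copy of it."""

    def __init__(self, pretrained):
        self.locked = pretrained                    # never updated
        self.trainable = copy.deepcopy(pretrained)  # learns the condition
        self.gate = 0.0                             # zero-initialised connection

    def __call__(self, x, condition):
        base = self.locked(x)
        control = self.trainable(x + condition)
        return base + self.gate * control

    def train_step(self, x, condition, target, lr=0.01):
        """One gradient step on 0.5 * error**2; the locked block is untouched."""
        control = self.trainable(x + condition)
        error = self(x, condition) - target
        grad_gate = error * control
        grad_w = error * self.gate * (x + condition)
        grad_b = error * self.gate
        self.gate -= lr * grad_gate
        self.trainable.w -= lr * grad_w
        self.trainable.b -= lr * grad_b


pretrained = TinyBlock(w=2.0, b=1.0)
block = ControlledBlock(pretrained)
before = block(3.0, condition=0.5)  # same as pretrained(3.0): the gate is zero
for _ in range(50):
    block.train_step(3.0, 0.5, target=10.0)
after = block(3.0, condition=0.5)
```

After training, `after` has moved towards the target while `pretrained.w` and `pretrained.b` are exactly what they were at the start, which is the sense in which the original model is preserved.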

What does it do?

There are already 50+ public, open ControlNet models available on the Hugging Face Hub, with 1,200+ likes for the main one, and the project is growing as fast as Stable Diffusion on GitHub.

ControlNet is compatible with several preprocessing models that help it identify the shape and form of the subject in an image. The following section walks through each of them with examples.

Different Models in ControlNet Stable Diffusion

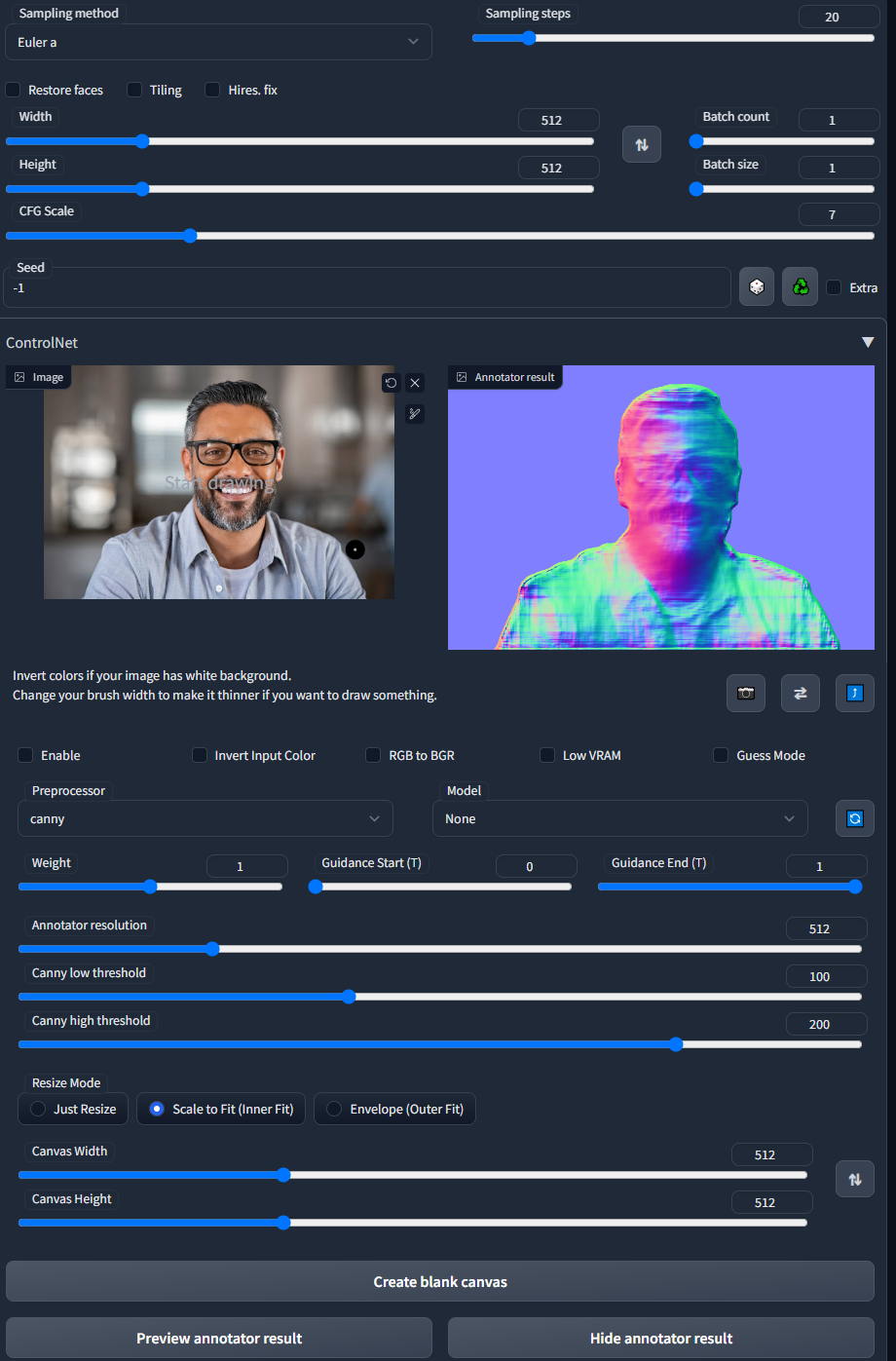

Canny Edge Model

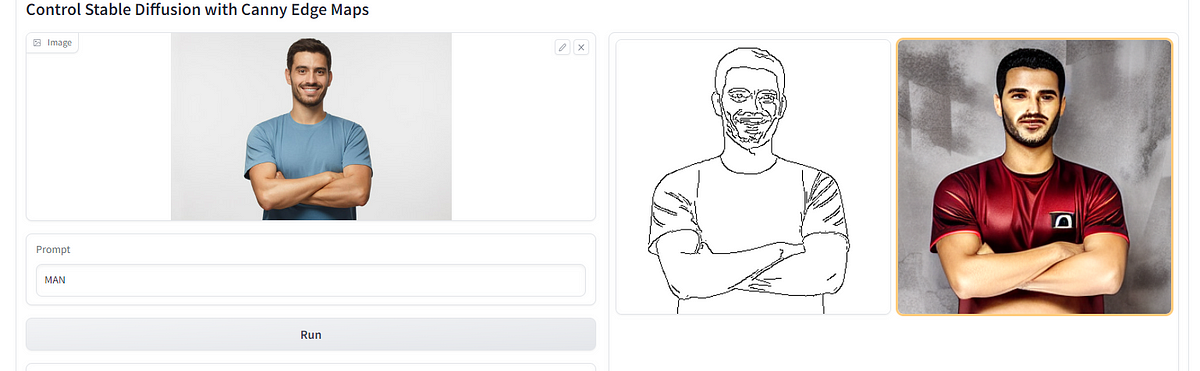

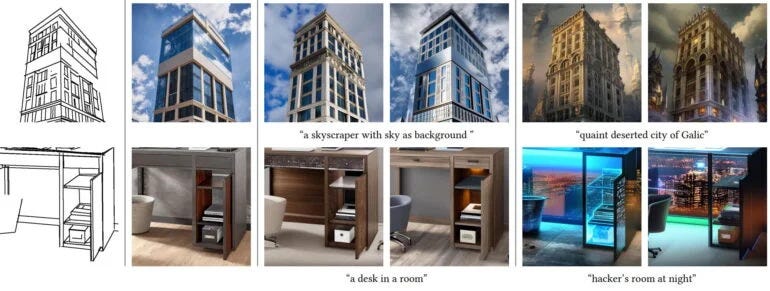

- The Canny edge detector is a general-purpose, classic edge detector. It extracts the outlines of an image, which makes it useful for retaining the composition of the original image.
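To give a feel for what an edge map is, here is a minimal sketch in plain Python. It is deliberately not the full Canny algorithm, which also applies Gaussian smoothing, non-maximum suppression, and hysteresis with two thresholds; it only thresholds the gradient magnitude. The function name `edge_map` and the tiny test image are made up for illustration.

```python
def edge_map(image, threshold):
    """Return a binary edge map from a 2D list of grayscale values.

    The gradient is estimated with central differences; border pixels
    are left at 0 because they lack a neighbour on one side.
    """
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = image[y][x + 1] - image[y][x - 1]
            gy = image[y + 1][x] - image[y - 1][x]
            magnitude = (gx * gx + gy * gy) ** 0.5
            if magnitude >= threshold:
                edges[y][x] = 1
    return edges


# A 5x6 image: dark left half (0), bright right half (255).
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = edge_map(image, threshold=100)
```

Only the pixels along the dark-to-bright boundary are marked, so the result is an outline of the image's structure with its colours and textures discarded.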

Scribbles

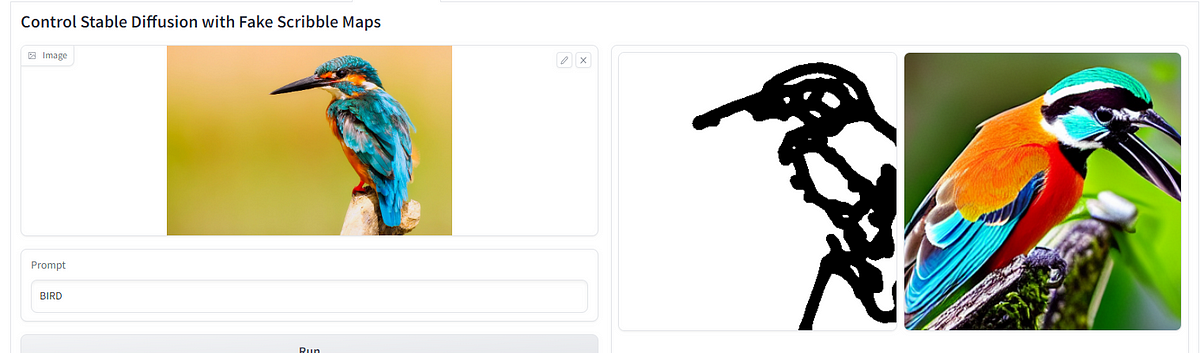

- ControlNet can also turn something you scribble into an image!

Depth Map

- Like depth-to-image in Stable Diffusion v2, ControlNet can infer a depth map from the input image. ControlNet's depth map has a higher resolution than Stable Diffusion v2's.

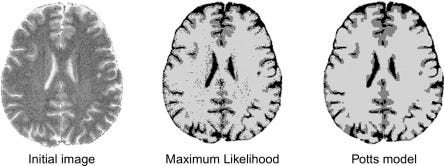

Segmentation Map

- Generate images based on a segmentation map extracted from the input image.

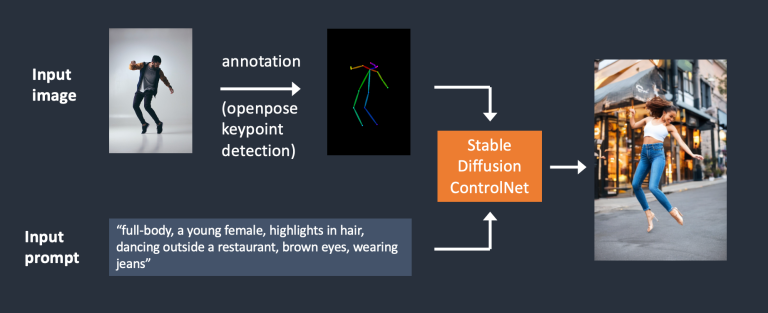

Human pose detection

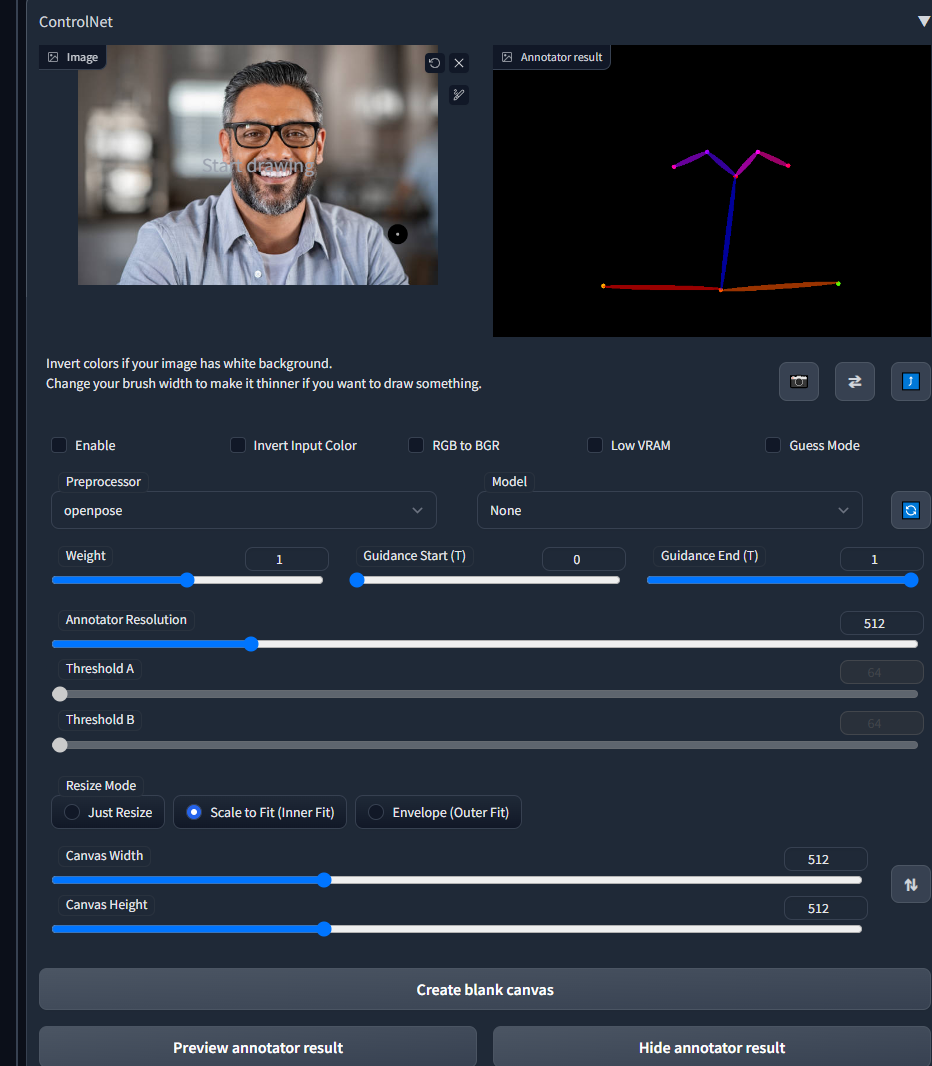

- OpenPose is a fast keypoint detection model that can extract human poses, such as the positions of the hands, legs, and head. See the example below.

- Below is the ControlNet workflow using OpenPose. Keypoints are extracted from the input image using OpenPose and saved as a control map containing the positions of the keypoints. The control map is then fed to Stable Diffusion as extra conditioning together with the text prompt. Images are generated based on these two conditionings.

- OpenPose only detects keypoints, so the image generation is freer but still follows the original pose. The above example generated a woman jumping up with her left foot pointing sideways, which differs from the original image and from the one in the Canny Edge example. The reason is that OpenPose's keypoint detection does not specify the orientation of the feet.
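The workflow described above can be sketched roughly as follows. The keypoint names and coordinates are invented for illustration (a real OpenPose run produces them from the input image), and `render_control_map` and `build_conditioning` are hypothetical helpers, not part of OpenPose or ControlNet.

```python
def render_control_map(keypoints, width, height):
    """Rasterise named (x, y) keypoints onto a blank grid; 1 marks a keypoint."""
    control_map = [[0] * width for _ in range(height)]
    for name, (x, y) in keypoints.items():
        if 0 <= x < width and 0 <= y < height:
            control_map[y][x] = 1
    return control_map


def build_conditioning(prompt, control_map):
    """Bundle the two conditionings that guide generation."""
    return {"prompt": prompt, "control_map": control_map}


# Hypothetical keypoints, as if extracted from an input photo.
keypoints = {
    "head": (3, 1),
    "left_hand": (1, 3),
    "right_hand": (5, 3),
    "left_foot": (2, 6),
    "right_foot": (4, 6),
}
control_map = render_control_map(keypoints, width=7, height=8)
conditioning = build_conditioning("a woman jumping", control_map)
```

Each foot is a single point in the map, with no direction attached, which is why the generated image is free to choose which way the feet point.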

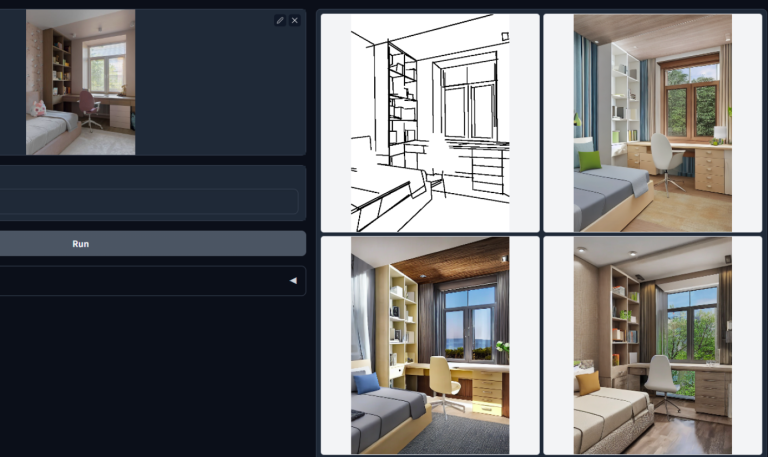

Straight line detector

- ControlNet can be used with M-LSD (Mobile Line Segment Detection), a fast straight-line detector. It is useful for extracting outlines with straight edges like interior designs, buildings, street scenes, picture frames, and paper edges.

HED edge detector

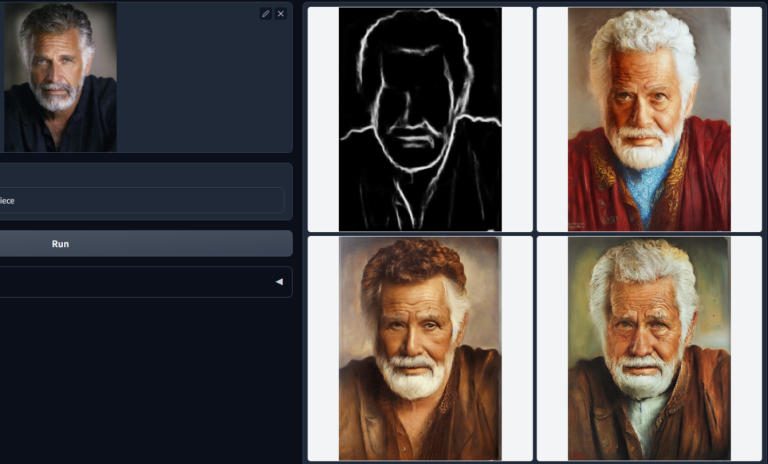

HED (Holistically-Nested Edge Detection) is an edge detector good at producing outlines as an actual person would. According to ControlNet’s authors, HED is suitable for recolouring and restyling an image.

Use Cases of ControlNet with Stable Diffusion

- Image Reconstruction: ControlNet with Stable Diffusion can be used to reconstruct high-resolution images from low-resolution or degraded images. This can be useful in fields like forensics or medical imaging, where it's important to obtain as much detail as possible from an image.

- Object Detection and Recognition: ControlNet with Stable Diffusion can be used to detect and recognize objects in images, even in challenging lighting or weather conditions. This can be useful in fields like autonomous driving or security, where quick and accurate object recognition is critical.

- Image Restoration: ControlNet with Stable Diffusion can be used to restore old or damaged images, bringing them back to their original quality. This can be useful in fields like art restoration or historical preservation.

- Data Augmentation: ControlNet with Stable Diffusion can be used to generate new and unique data for training machine learning models. This can be useful in fields like computer vision or natural language processing, where having a diverse and representative dataset is important for model accuracy.

- 3D Reconstruction: ControlNet with Stable Diffusion can be used to reconstruct 3D models from 2D images or video. This can be useful in fields like architecture or virtual reality, where it's important to create realistic and immersive 3D environments.

ControlNet Demo using Stable Diffusion

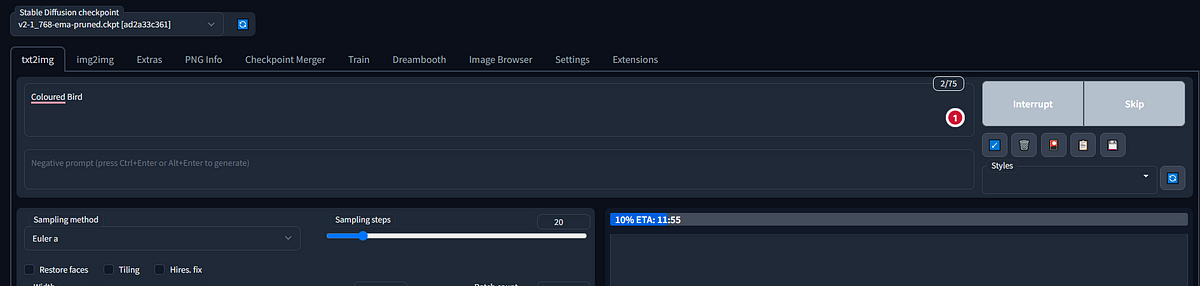

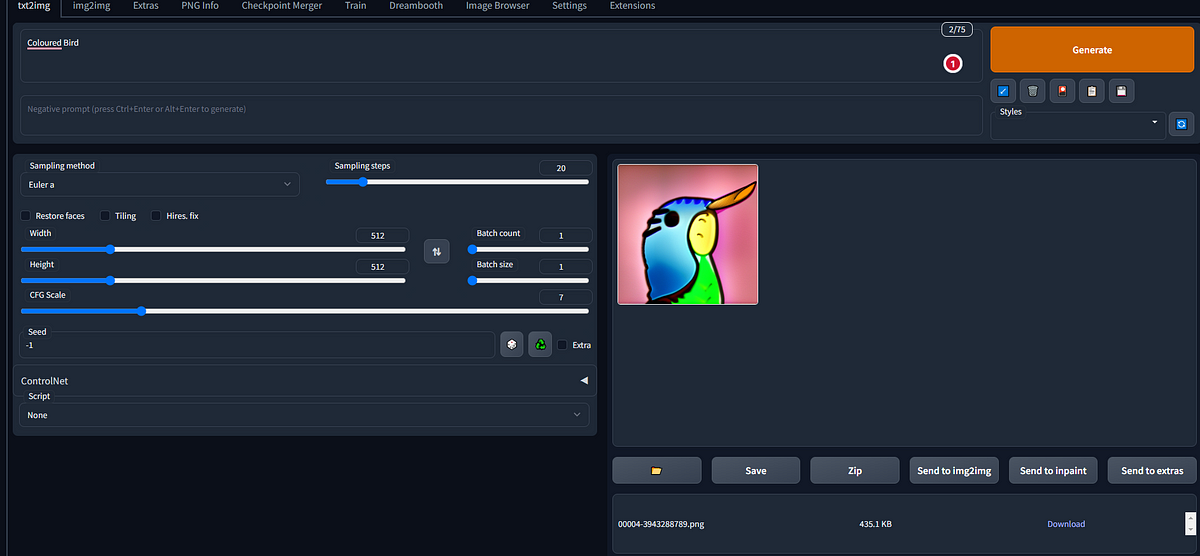

Open the Automatic1111 web interface in your browser.

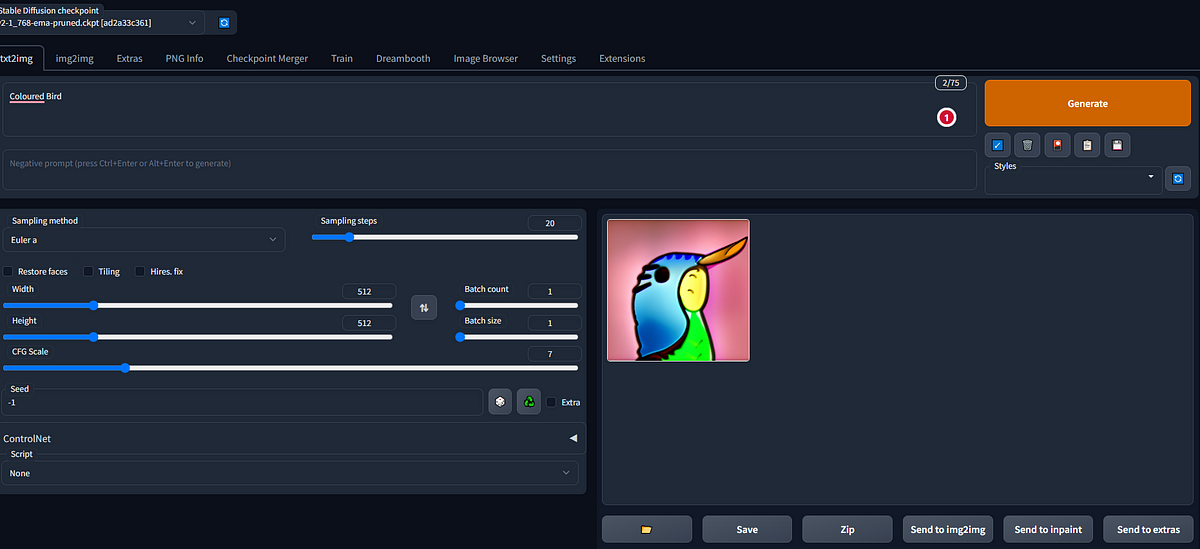

The Stable Diffusion GUI comes with lots of options and settings. The first tab is the text-to-image page. Enter a prompt, click the Generate button, and wait until it finishes. As the image below shows, generating the image can take a few minutes.

- In the image below, I entered the prompt 'Coloured Bird' and clicked the Generate button to produce the output.
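The same generation can also be scripted instead of clicked. The sketch below assumes the web UI was started with its `--api` flag and is listening on `127.0.0.1:7860`; neither detail comes from this walkthrough, so adjust them to your setup. The helper names `build_txt2img_payload` and `generate` are made up for illustration, and the step count and image size are arbitrary example values.

```python
import base64
import json
import urllib.request

# Assumed local address of a web UI launched with --api.
API_URL = "http://127.0.0.1:7860/sdapi/v1/txt2img"


def build_txt2img_payload(prompt, steps=20, width=512, height=512):
    """Build the JSON body for a text-to-image request."""
    return {"prompt": prompt, "steps": steps, "width": width, "height": height}


def generate(prompt, url=API_URL):
    """Send the request and return the generated images as raw bytes."""
    data = json.dumps(build_txt2img_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        result = json.load(response)
    # The response carries the images as base64-encoded strings.
    return [base64.b64decode(image) for image in result["images"]]


payload = build_txt2img_payload("Coloured Bird")
```

Calling `generate("Coloured Bird")` against a running instance should return the image bytes, which can then be written to a `.png` file.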

Output

- As the attached screenshots show, there are numerous options for the generated image: save it, make a zip file, send it to img2img, send it to inpaint, and more. Explore the available options to see which ones suit your needs best. For reference, I have attached two screenshots below.

You can see the Download Image option after clicking on the save image button.

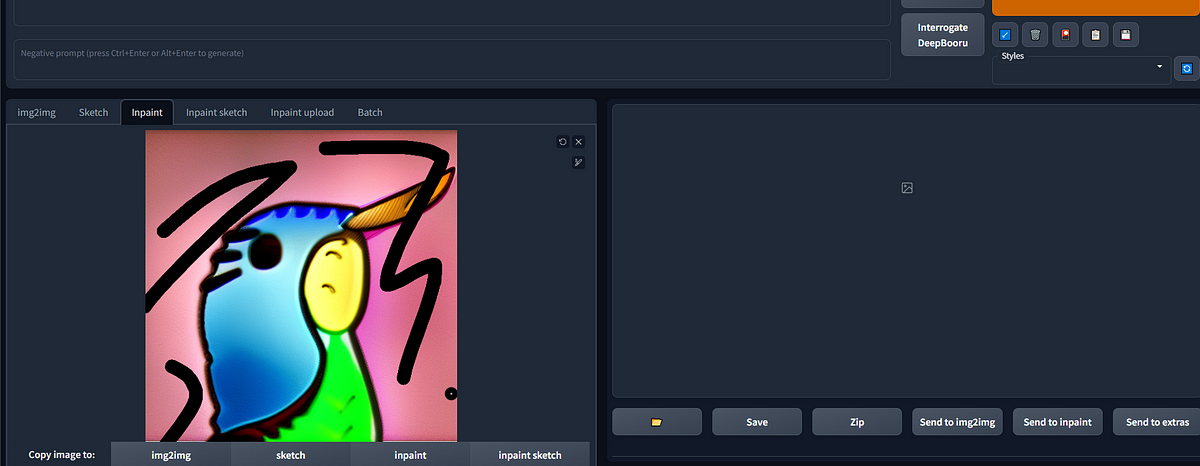

You can also inpaint the generated image.

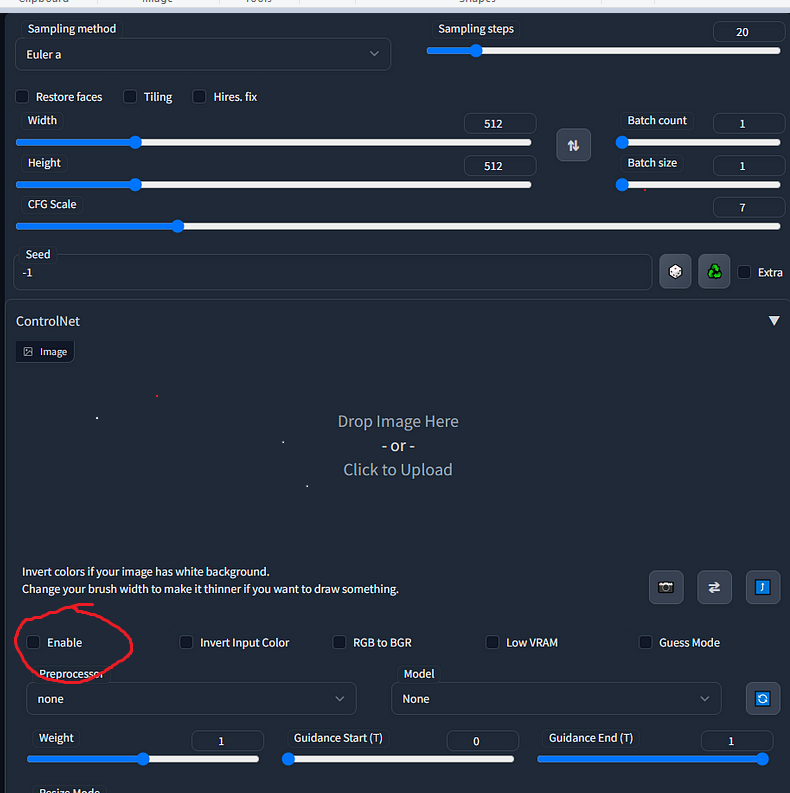

- We can try ControlNet now. The ControlNet option is further down the page; I have attached a screenshot for reference. You can upload an image from your machine, or drag and drop one of your generated images.

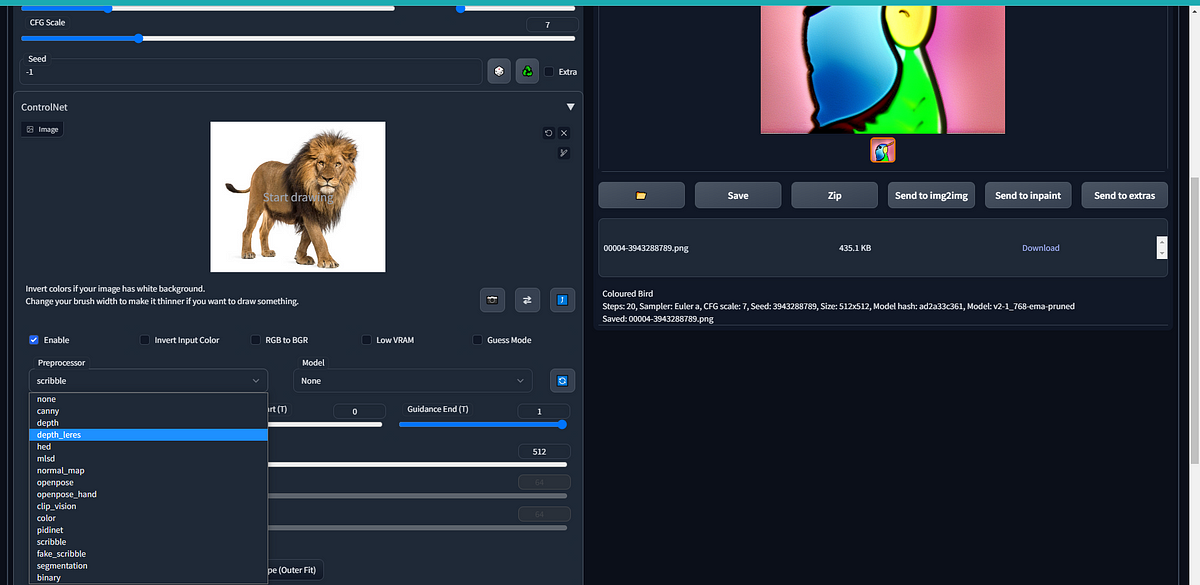

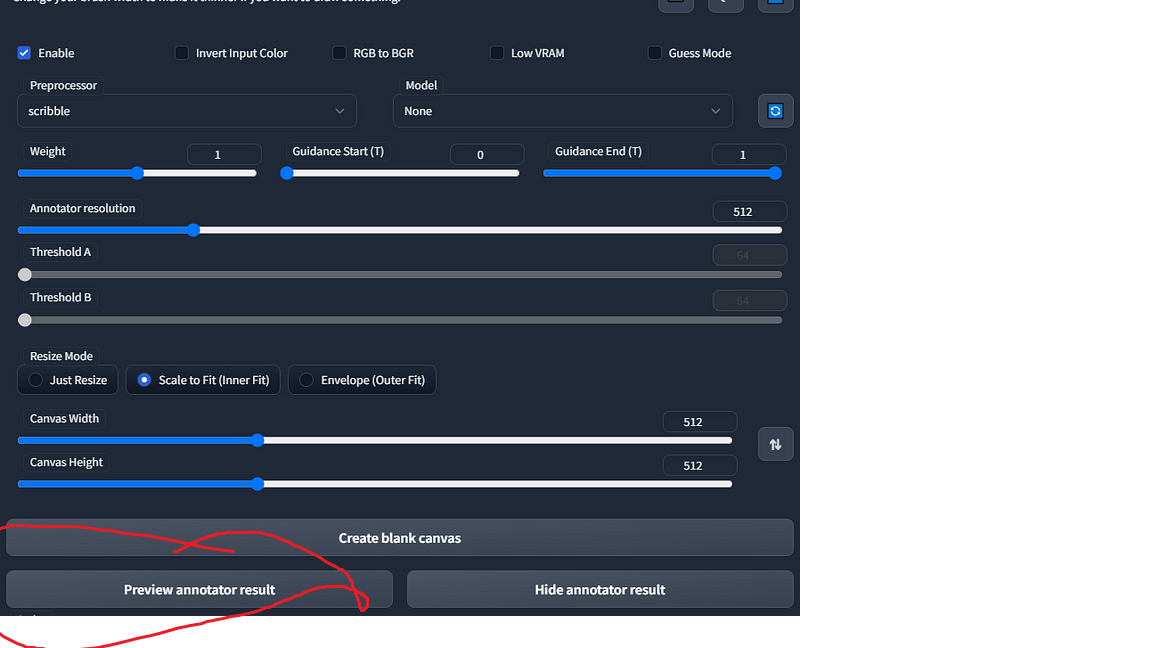

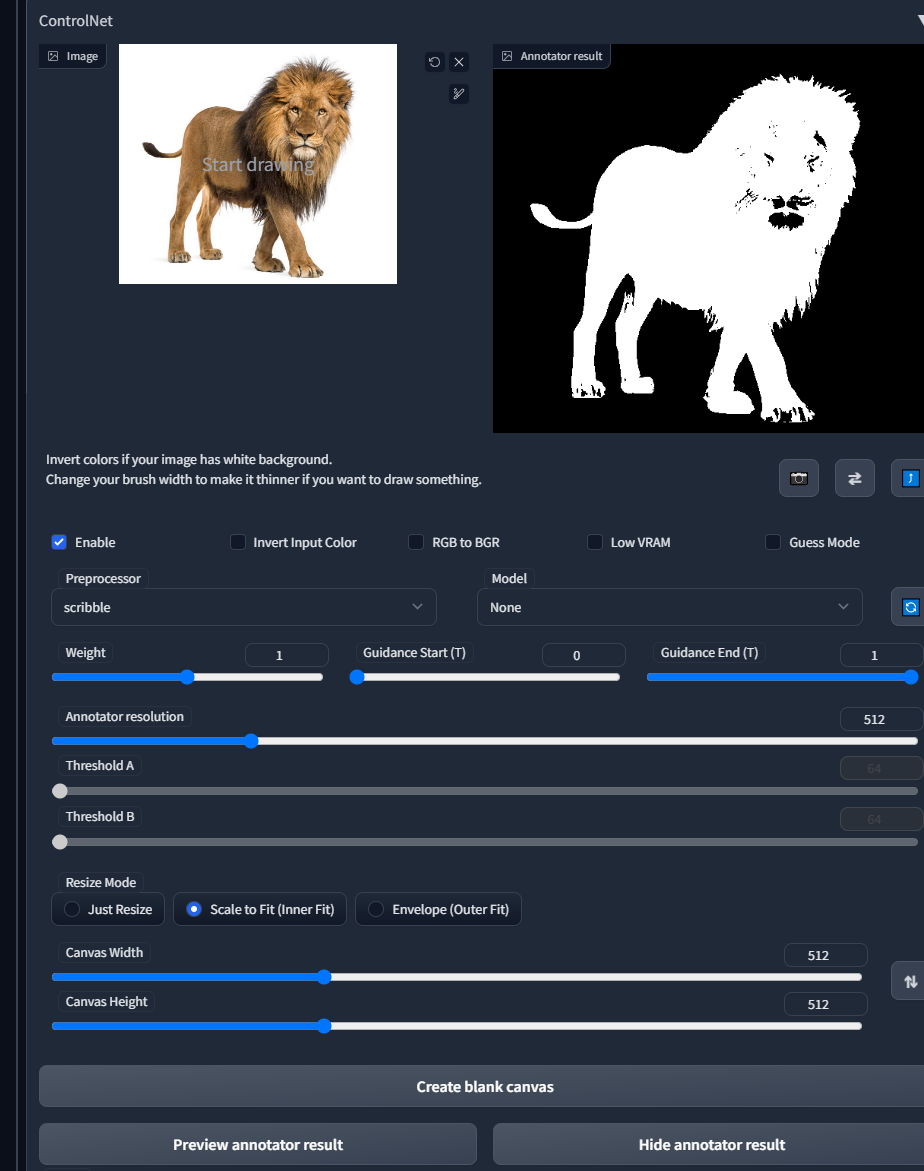

- I uploaded an image of a lion from my machine to try ControlNet on it. Tick the Enable option, then select the preprocessor and model that suit your image.

Select the Preprocessor and Model of Your Choice.

- After making your selections, click the Preview annotator result button to see the output.

- I used the Scribble preprocessor, and you can see the output in the image below. You can also select a model; I used 'No Model', but you can choose the one that suits your needs. You can also create images without a model. Depending on your needs, you can adjust settings such as Annotator resolution, Threshold A, Threshold B, Weight, Canvas Width, and Canvas Height.

Scribble

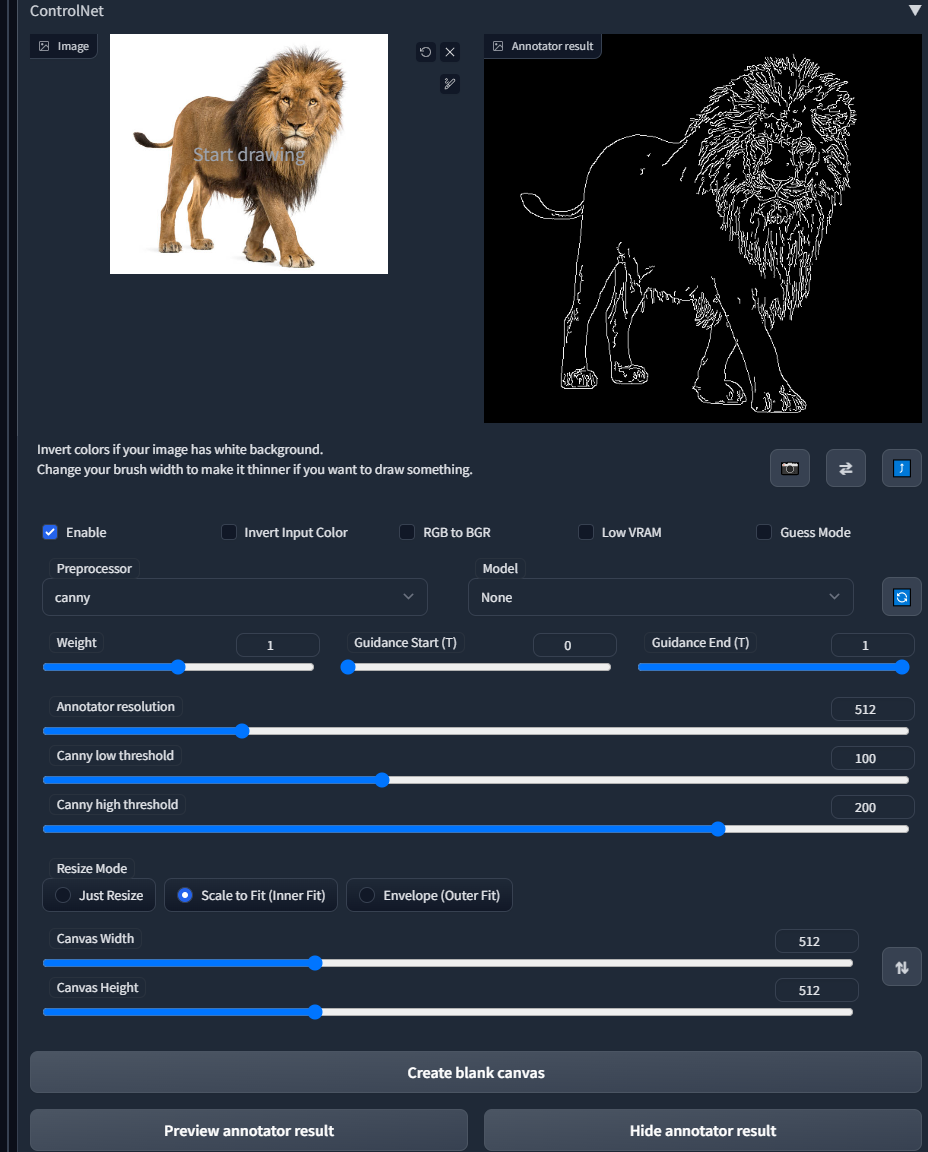

- Next, I tried the Canny preprocessor, and you can see the output in the image below. Depending on your needs, you can adjust settings such as Annotator resolution, Canny low threshold, Canny high threshold, and Weight.
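These settings can also be passed in a scripted request. The sketch below builds the ControlNet part of such a request. The field names (`alwayson_scripts`, `input_image`, `module`, `processor_res`, `threshold_a`, `threshold_b`, `weight`) reflect my understanding of the ControlNet extension's API and may differ between versions, so treat all of them as assumptions and check the documentation for your installed version. The helper names and the placeholder model name are hypothetical.

```python
import base64


def build_controlnet_unit(image_bytes, model_name, low_threshold=100,
                          high_threshold=200, weight=1.0, resolution=512):
    """Describe one ControlNet unit that uses the Canny preprocessor.

    Field names are assumptions about the extension's API, not verified
    against a specific version.
    """
    return {
        "input_image": base64.b64encode(image_bytes).decode("ascii"),
        "module": "canny",                # preprocessor
        "model": model_name,              # name as shown in your Model dropdown
        "weight": weight,
        "processor_res": resolution,      # Annotator resolution
        "threshold_a": low_threshold,     # Canny low threshold
        "threshold_b": high_threshold,    # Canny high threshold
    }


def attach_controlnet(payload, unit):
    """Return a copy of a generation payload with the ControlNet unit added."""
    extended = dict(payload)
    extended["alwayson_scripts"] = {"controlnet": {"args": [unit]}}
    return extended


# Placeholder bytes and model name; use a real image file and your own model.
unit = build_controlnet_unit(b"fake-image-bytes", "your-canny-model")
request_body = attach_controlnet({"prompt": "a lion"}, unit)
```

Keeping the unit in its own dictionary makes it easy to try different thresholds or weights without touching the rest of the request.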

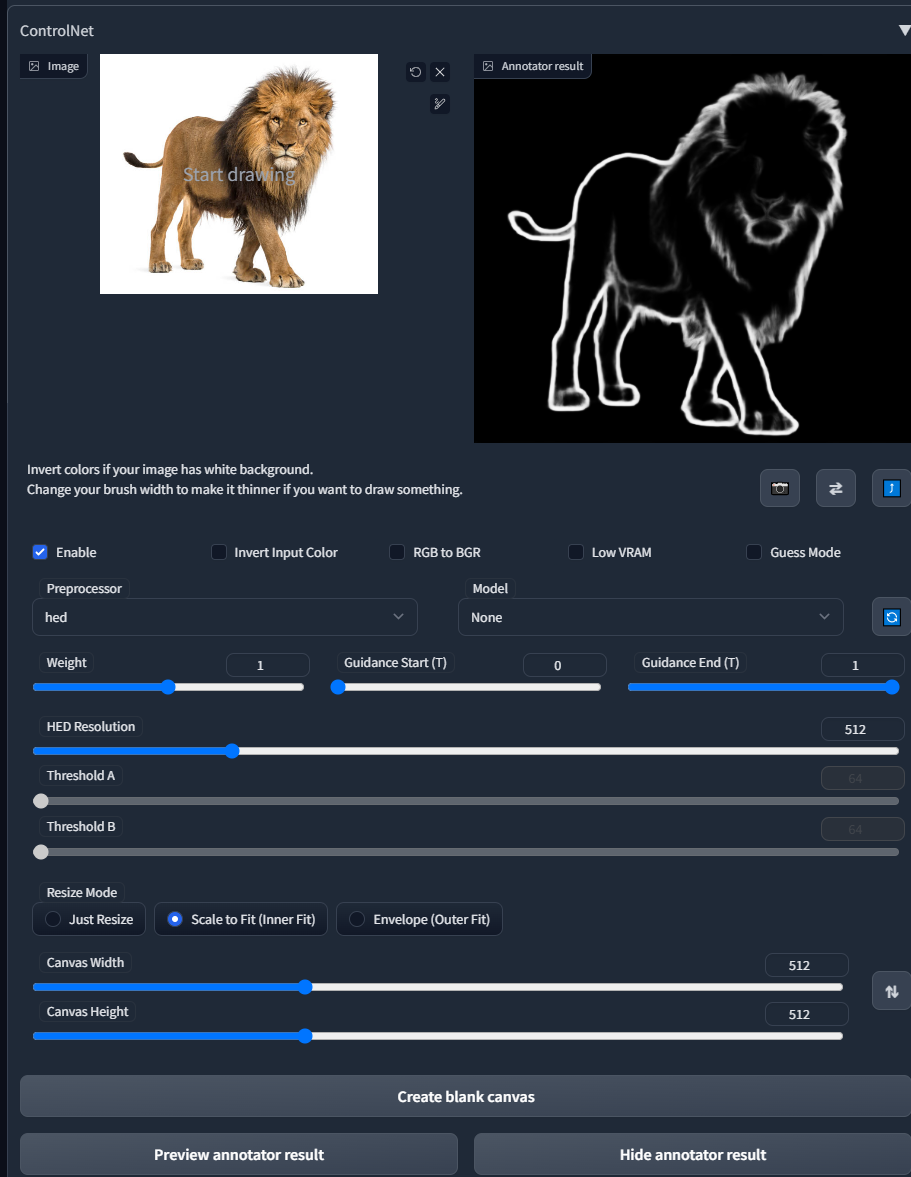

- Next, I tried the HED preprocessor, and you can see the output in the image below. Depending on your needs, you can adjust settings such as HED Resolution and Threshold.

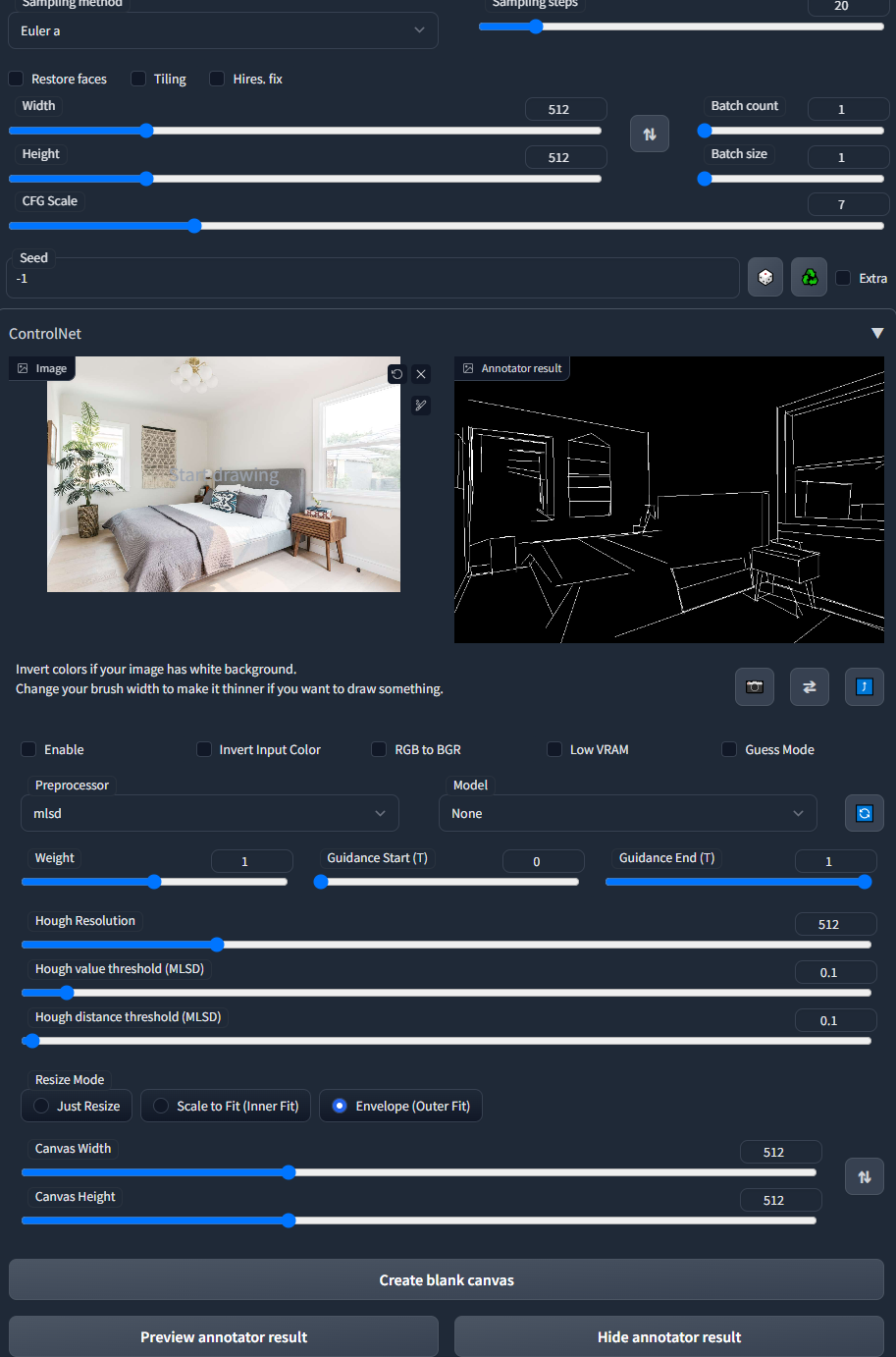

- Now we can try ControlNet Stable Diffusion with M-LSD.

ControlNet Stable Diffusion with M-LSD

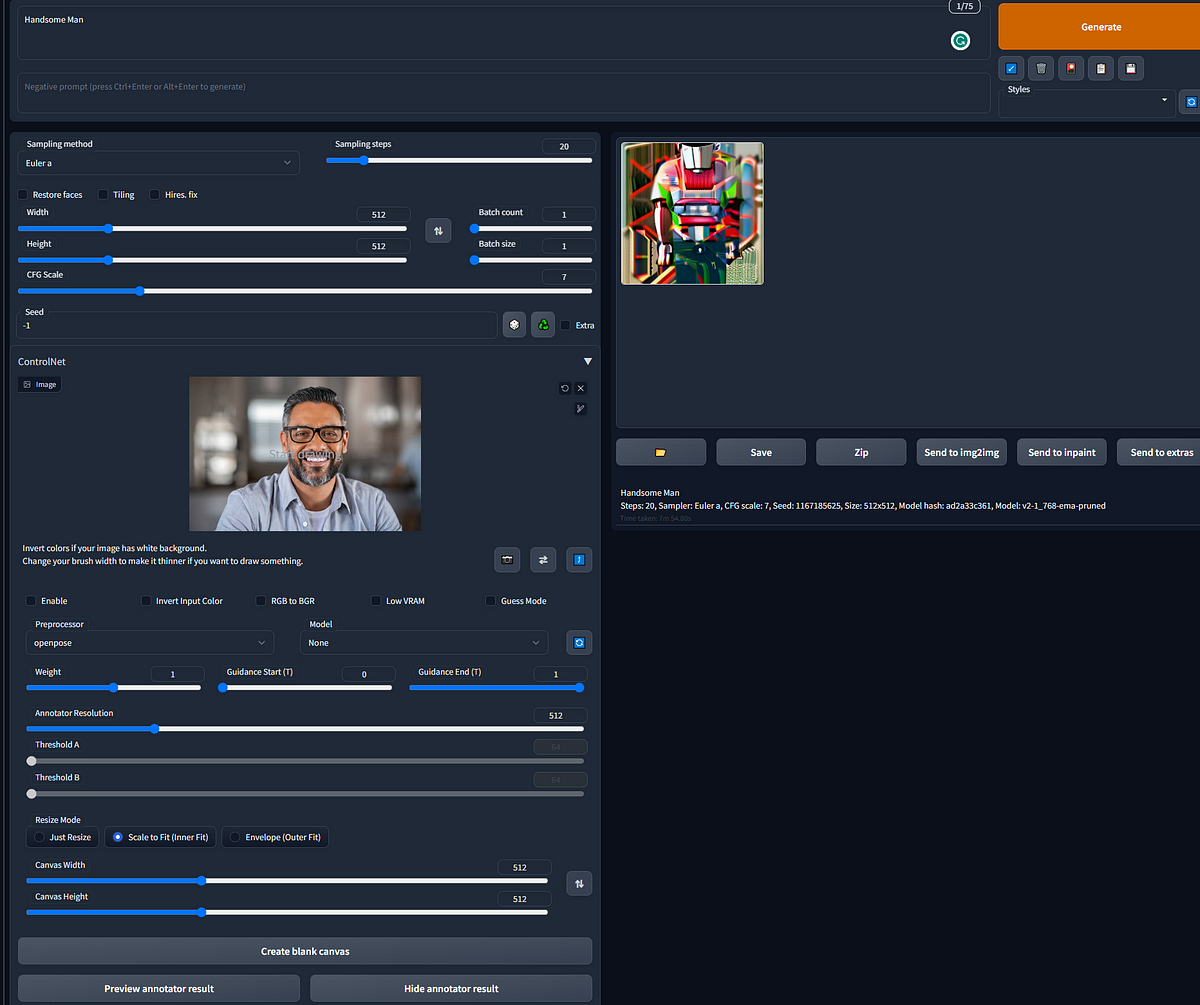

- Next, I entered the prompt "Smart Man" and selected an image from my machine. You can see the output in the image below.

- I have now selected the Openpose preprocessor, and you can see the output in the image below.

- I have now selected the Canny preprocessor, and you can see the output in the image below.

ControlNet with Canny Edge

Conclusion

In this article, we explained ControlNet's features and provided a step-by-step guide to using ControlNet in the Automatic1111 Stable Diffusion interface. If you found this article helpful and want to try ControlNet with Stable Diffusion, please check our article on setting up Stable Diffusion with ControlNet. You can find the link below.